Unsupervised Learning of Variational Autoencoders on Cortex-M Microcontrollers

Published in IEEE 18th International Symposium on Embedded Multicore/Many-core Systems-on-Chip (MCSoC), 2025

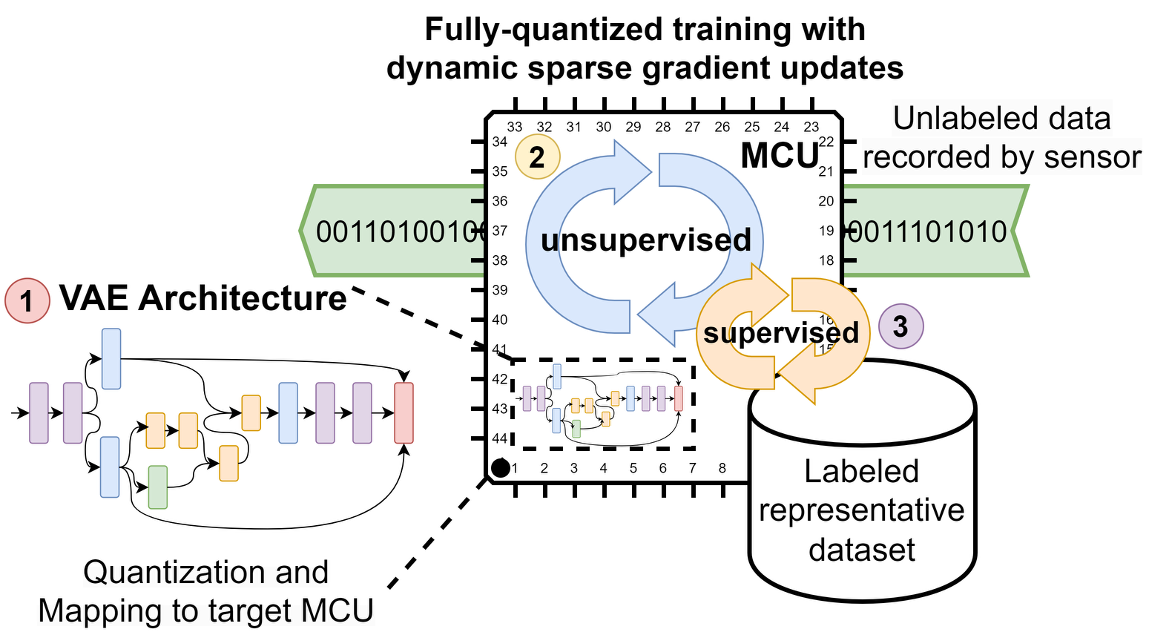

Training and fine-tuning deep neural networks (DNNs) to adapt to new and unseen data at the edge has recently attracted considerable research interest. However, most work to date has focused on supervised learning of pre-trained, quantized DNNs. Although these techniques enable DNN training even on resource-constrained devices such as microcontroller units (MCUs), they do not address the fact that supervised training requires large amounts of labeled data. Moreover, while data are readily available at the edge, ground-truth labels typically are not. In this work, we explore variational autoencoders as a way to train feature extractors for image classification on a Cortex-M MCU in an unsupervised manner, i.e., without labels. Using these feature extractors, we then train classification heads on small, representative labeled datasets to solve classification tasks. Our approach significantly reduces the amount of labeled data required, while enabling full DNN training from scratch on resource-constrained devices.
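For readers unfamiliar with variational autoencoders, the unsupervised objective mentioned above can be sketched as follows. This is a minimal illustration of the textbook VAE loss (negative evidence lower bound) in floating-point NumPy on a host machine, not the on-device implementation from the paper. The function names, the squared-error reconstruction term, and the unit weighting of the KL term are assumptions made for illustration.

```python
import numpy as np

def reparameterize(mu, log_var, rng):
    """Draw z = mu + sigma * eps with eps ~ N(0, I) (reparameterization trick)."""
    eps = rng.standard_normal(mu.shape)
    return mu + np.exp(0.5 * log_var) * eps

def kl_to_standard_normal(mu, log_var):
    """KL(N(mu, sigma^2) || N(0, I)) per sample, summed over latent dimensions."""
    return -0.5 * np.sum(1.0 + log_var - mu**2 - np.exp(log_var), axis=-1)

def vae_loss(x, x_hat, mu, log_var):
    """Batch-averaged negative ELBO.

    Assumption: squared-error reconstruction term and KL weight of 1;
    the paper may use a different reconstruction loss or weighting.
    """
    recon = np.sum((x - x_hat) ** 2, axis=-1)
    return np.mean(recon + kl_to_standard_normal(mu, log_var))
```

No labels appear anywhere in this loss: the encoder outputs `mu` and `log_var` are learned purely from reconstructing the input. After such training, the encoder mean `mu` could serve as the feature vector on which a small classification head is trained with the limited labeled data.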

Recommended citation: Deutel, M., Plinge, A., Seuß, D., Mutschler, C., Hannig, F., & Teich, J. (2025). Unsupervised Learning of Variational Autoencoders on Cortex-M Microcontrollers. IEEE 18th International Symposium on Embedded Multicore/Many-core Systems-on-Chip (MCSoC).

Download Paper | Download Paper (open) | Download Slides