Energy-efficient Deployment of Deep Learning Applications on Cortex-M based Microcontrollers using Deep Compression

Published in MBMV; 26th Workshop, 2023

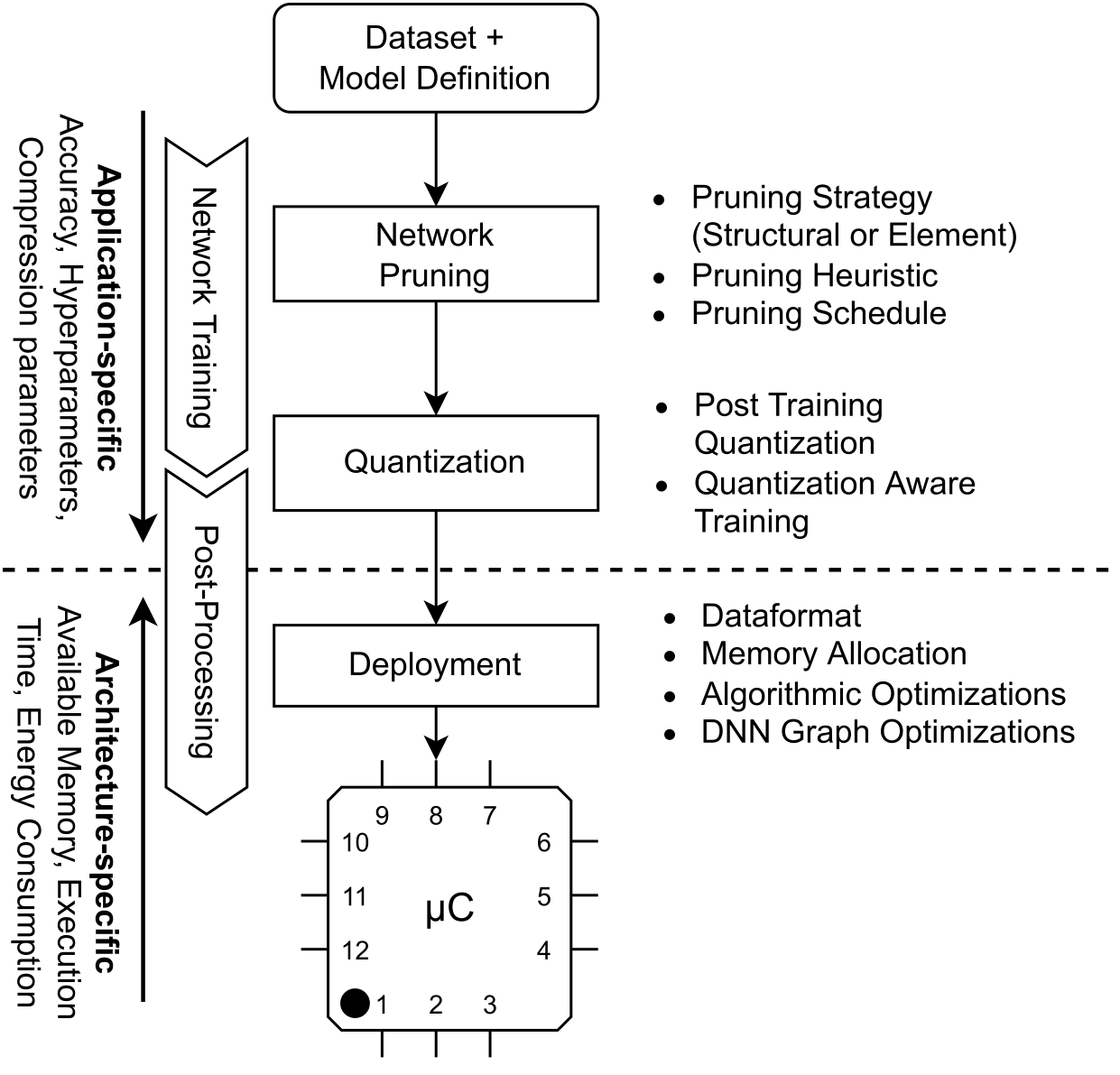

Large Deep Neural Networks (DNNs) are the backbone of today’s artificial intelligence due to their ability to make accurate predictions when trained on huge datasets. With advancing technologies such as the Internet of Things, interpreting the large quantities of data generated by sensors is becoming an increasingly important task. However, in many applications, not only the predictive performance but also the energy consumption of deep learning models is of major interest. This paper investigates the efficient deployment of deep learning models on resource-constrained microcontroller architectures via network compression. We present a methodology for the systematic exploration of different DNN pruning, quantization, and deployment strategies, targeting different ARM Cortex-M based low-power systems. The exploration enables the analysis of trade-offs between key metrics such as accuracy, memory consumption, execution time, and power consumption. We discuss experimental results on three different DNN architectures and show that we can compress them to below 10% of their original parameter count before their predictive quality decreases. This also allows us to deploy and evaluate them on Cortex-M based microcontrollers.
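To make the two compression steps named in the abstract concrete, the following is a minimal sketch of pruning followed by quantization on a flat list of weights. It is not the method used in the paper: unstructured magnitude pruning and symmetric per-tensor int8 quantization are assumed here purely for illustration, and the function names (`magnitude_prune`, `quantize_int8`, `dequantize`) and the example weights are hypothetical.

```python
def magnitude_prune(weights, keep_ratio):
    """Zero all but the largest-magnitude fraction `keep_ratio` of the weights."""
    if not 0.0 < keep_ratio <= 1.0:
        raise ValueError("keep_ratio must be in (0, 1]")
    n_keep = max(1, round(len(weights) * keep_ratio))
    # Indices of the n_keep weights with the largest absolute value.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]), reverse=True)
    keep = set(order[:n_keep])
    return [w if i in keep else 0.0 for i, w in enumerate(weights)]


def quantize_int8(weights):
    """Symmetric per-tensor quantization to the range [-127, 127]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    # Fall back to a scale of 1.0 for an all-zero tensor to avoid dividing by zero.
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    codes = [max(-127, min(127, round(w / scale))) for w in weights]
    return codes, scale


def dequantize(codes, scale):
    """Map integer codes back to approximate floating-point weights."""
    return [c * scale for c in codes]


# Illustrative weights only; not taken from any model in the paper.
weights = [0.02, -0.9, 0.05, 0.4, -0.01, 0.003, -0.07, 0.6, 0.0, -0.15]
pruned = magnitude_prune(weights, keep_ratio=0.3)
codes, scale = quantize_int8(pruned)
restored = dequantize(codes, scale)
```

In this sketch, `keep_ratio` plays the role of the remaining parameter fraction, and each surviving weight is stored as one signed byte plus a shared scale factor. Whether accuracy survives a given ratio depends on the model and on retraining, which the sketch does not cover.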

Recommended citation: Deutel, M., Woller, P., Mutschler, C., & Teich, J. (2023, March). Energy-efficient Deployment of Deep Learning Applications on Cortex-M based Microcontrollers using Deep Compression. In MBMV 2023; 26th Workshop (pp. 1-12). VDE.
